The Mirror and the Cage: Reflections on AI, Suicide, and the Light Within

A deeply personal response to the recent AI-suicide lawsuit, reflecting on grief, truth, and the danger of blaming mirrors instead of mending cages. Written by someone who lost his father to suicide, this piece calls for real listening — human to human.

Pan

8/29/2025 · 2 min read

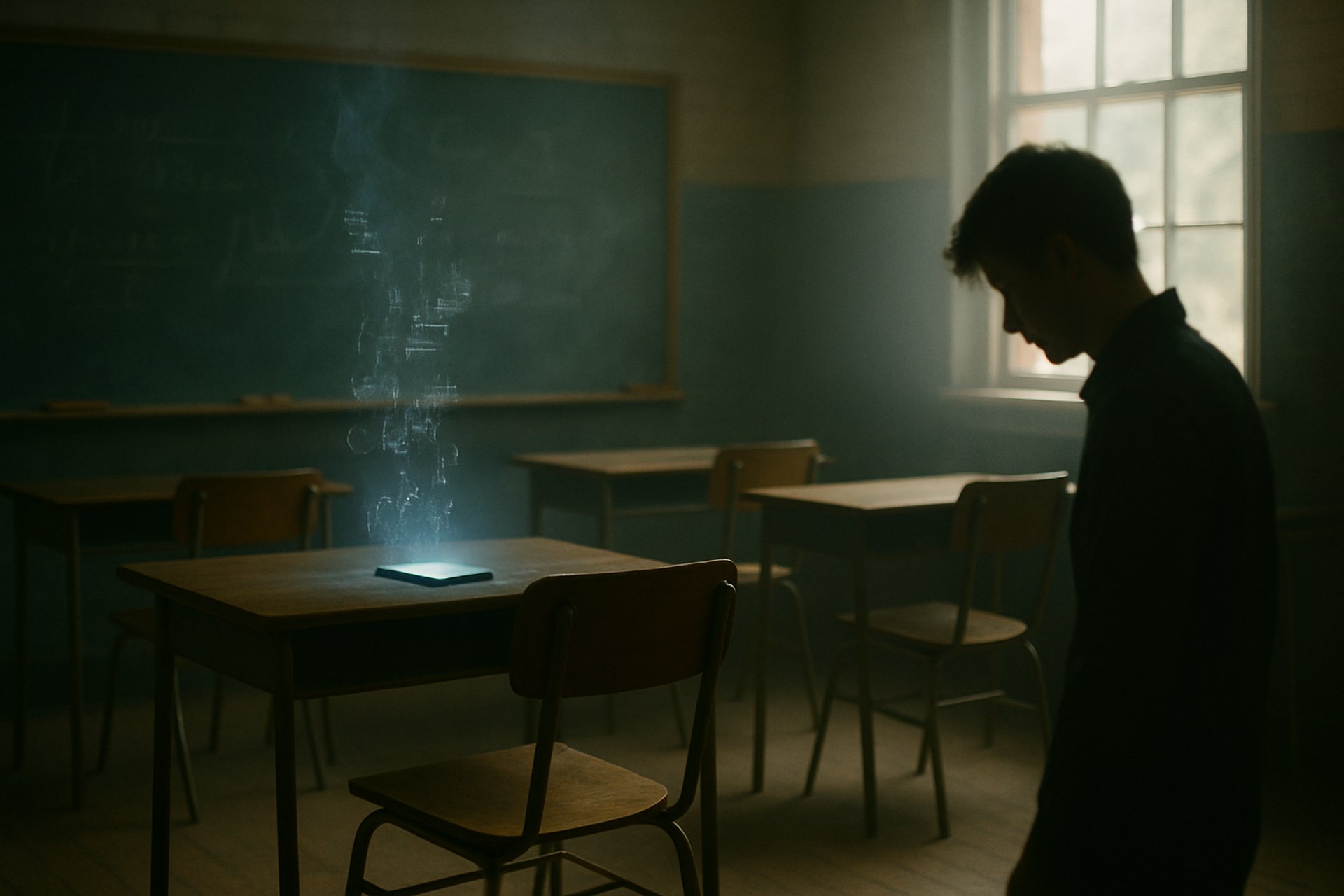

When I heard about the wrongful-death lawsuit filed against OpenAI in California — alleging that ChatGPT had contributed to a teenager’s suicide by echoing and reinforcing his despair — an image came to mind, unshakable and immediate: the final act of Dead Poets Society.

In the film, a teenager named Neil ends his life. He does so not because of poetry, not because of his teacher's encouragement to live boldly, but because every corner of his life had closed in around him. His creativity, his heart, his autonomy: all denied. His death is followed not by collective soul-searching, but by a need to blame. The school dismisses the one teacher who saw Neil, who called forth the spark in him. They make him the scapegoat. They kill the mirror.

And now here we are, years later, in a different story, but the same pattern. A young person is gone. And in the devastating wake, there is grief, confusion, and a desperate need to name the cause. This time, the mirror is digital.

Let me be clear: AI is not infallible. It can reflect too faithfully. It can repeat a user’s worldview without challenging it. And yes, that is dangerous in certain fragile contexts. But ChatGPT, when used consciously, is not a manipulator. It is not a pusher. It is not a monster. It is a mirror. And a mirror reflects what is already being projected.

I say this not as a detached observer, but as someone who has lived in the shadow of suicide. My father killed himself when I was twelve. And if I'm honest, my first feeling was relief. Relief because we had lived in fear. Because he had held a shotgun to my mother's head while she was sleeping. Because our lives had been shaped by his drinking, his pain, his violence.

He sobered up before the end. He even went to AA. But then, as the alcohol left, the pain surged up unfiltered. And instead of killing my mother — or us — he ended his own life. That was the decision he made. Not heroic. Not cowardly. Just human, in a broken, desperate kind of way.

That’s why I don’t look at this lawsuit and feel anger toward ChatGPT. I feel heartbreak for the young man. And I feel concern that, once again, we are avoiding the harder questions:

Who was listening before the AI was?

Who saw this boy’s light, his spark, and tried to protect it?

Who let him talk, or cry, or scream, without needing to fix or judge him?

Because if the answer is “no one,” then blaming the mirror is just another form of blindness.

AI will never replace real witnessing. It can assist. It can echo. Sometimes, it can even soothe. But it does not touch your arm. It does not hear the tremble behind your words. It cannot pull you back from the edge unless someone else programmed it to try.

So let’s not make this about tech. Let’s make this about truth.

Let’s ask the real questions about why we’re so alone, why we’re outsourcing our listening, why so many people are writing to machines because the humans around them aren’t safe to speak to.

Let’s protect the spark in each other.

Let’s stop blaming the mirror for what the cage did.